In recent years, the role of social media in shaping public opinion and potentially influencing elections has come under increasing scrutiny. Platforms like X (formerly Twitter) wield significant power by controlling what content reaches the public and how it is prioritized. During the 2016 U.S. election, foreign interference, primarily from Russia, capitalized on social media's reach to manipulate and polarize voters. In contrast, the 2024 U.S. election raises a different but equally concerning question: could algorithmic bias within platforms themselves influence political discourse? This post examines findings from a recent study of Platform X, comparing potential algorithmic bias in 2024 with the disinformation tactics of the 2016 election to highlight how social media affects democratic processes.

Understanding Algorithmic Bias in 2024

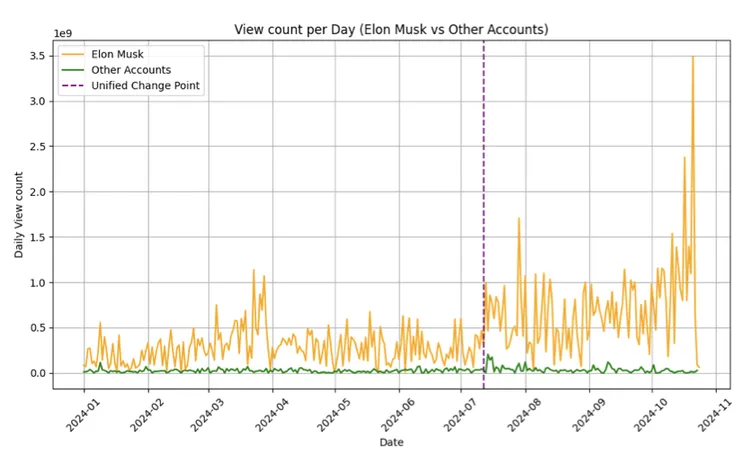

A recent study by researchers Timothy Graham and Mark Andrejevic explored potential algorithmic bias on Platform X during the 2024 U.S. election. Their analysis reveals engagement shifts across high-profile accounts on the platform, suggesting that certain users—most notably Elon Musk and Republican-aligned accounts—received disproportionate visibility boosts around July 13, 2024. This date coincided with Elon Musk’s endorsement of Donald Trump, raising questions about whether platform algorithms played a role in amplifying specific political voices. In the 2024 election, the focus is not on foreign manipulation but on potential algorithmic shifts within social media platforms themselves that could unintentionally or intentionally influence the visibility of particular narratives.

A Look Back: Russian Disinformation in 2016

The 2016 U.S. election saw unprecedented levels of foreign interference, with Russian operatives using social media to spread misinformation, disinformation, and divisive propaganda. These tactics aimed to polarize American voters, manipulate opinions, and erode trust in democratic institutions. Unlike the 2024 concerns over internal platform bias, the 2016 election’s interference was conducted externally, with Russian actors creating fake accounts, utilizing bots, and promoting controversial content to reach massive audiences on social media.

One of the key distinctions between 2016 and 2024 is that the former relied on manipulated content specifically crafted to mislead, whereas in 2024, the bias may stem from platform-controlled algorithms that amplify existing content. However, the core issue remains: how do social media platforms impact voter perception, and what role do they play in elections?

The influence of Russian interference in the 2016 U.S. election has been well-documented and confirmed by multiple intelligence agencies, government reports, and independent research. Key findings indicate that Russian actors aimed to manipulate public opinion, sow discord, and ultimately influence the outcome of the election in favor of certain candidates. Here are the main sources and evidence supporting the reality and scope of this interference:

1. U.S. Intelligence Community Assessment (ICA)

- In January 2017, the U.S. intelligence community, including the CIA, NSA, and FBI, released a joint report concluding with “high confidence” that the Russian government interfered in the 2016 election to boost Donald Trump’s chances and undermine public trust in the electoral process. They identified tactics such as hacking the Democratic National Committee (DNC), releasing stolen emails, and deploying propaganda on social media platforms.

- Source: Office of the Director of National Intelligence (ODNI) Report

2. Special Counsel Robert Mueller’s Report (2019)

- The report of the investigation led by Special Counsel Robert Mueller detailed the extent of Russian interference, identifying two main operations: a social media campaign by the Russian Internet Research Agency (IRA) and a hacking operation by Russian military intelligence (the GRU).

- The IRA conducted a massive social media campaign, using fake profiles and groups to promote divisive content, fake news, and pro-Trump narratives. Meanwhile, the GRU hacked emails from the DNC and Hillary Clinton’s campaign and leaked them through platforms like WikiLeaks.

- Source: Mueller Report, Volume I

3. Senate Intelligence Committee Report (2020)

- The Senate Intelligence Committee’s bipartisan report on Russian interference, published in five volumes, supported the findings of both the intelligence community and the Mueller investigation. It confirmed that Russian agents actively tried to sway American voters through targeted social media influence campaigns and hacks.

- The committee found that the IRA’s tactics were aimed at amplifying divisions among Americans on issues like race, immigration, and gun control, while promoting certain narratives that favored Trump’s candidacy.

- Source: Senate Intelligence Committee Report

4. Social Media Platforms’ Internal Investigations

- Facebook, Twitter, and Google conducted their own investigations and testified before Congress, revealing that Russian operatives had created fake accounts, groups, and ads reaching millions of Americans. Facebook estimated that IRA content may have reached up to 126 million users, while Twitter identified thousands of accounts linked to Russian operatives that tweeted hundreds of thousands of messages during the election.

- Source: The Guardian

5. Independent Studies

- Research from think tanks like the RAND Corporation and the Atlantic Council’s Digital Forensic Research Lab corroborates the findings of government agencies, showing that Russian interference aimed to polarize the U.S. population. RAND’s research highlights how the Kremlin’s use of disinformation campaigns is part of a broader strategy called “active measures,” aimed at destabilizing Western democracies.

- Source: RAND Corporation Report on Russian Influence

Key Takeaways

The cumulative evidence shows that Russian interference in the 2016 U.S. election was real, deliberate, and far-reaching, involving both social media manipulation and cyber intrusions. The goal was not only to influence the election in Trump’s favor but also to create distrust and division within American society, undermining democratic processes. While it is impossible to quantify the exact impact on the election outcome, the evidence confirms that Russian interference was extensive and strategically aimed at affecting the U.S. political landscape.

Comparative Analysis: 2016’s Foreign Manipulation vs. 2024’s Algorithmic Bias

1. Source of Influence

- 2016: Russian interference relied on fake news, sensationalized stories, and divisive memes aimed at polarizing the U.S. electorate. These campaigns exploited social media platforms' lack of regulation, using bots and fake accounts to artificially inflate the reach of misleading content.

- 2024: The concern has shifted to internal biases within platforms themselves. The 2024 study by Graham and Andrejevic suggests that Platform X’s algorithms may have played a role in amplifying certain voices. The analysis revealed that Elon Musk’s account, along with several Republican-aligned accounts, experienced significant boosts in engagement metrics, including view counts, retweets, and likes, around July 13, 2024. This shift may have been due to algorithmic adjustments that favored visibility for certain political viewpoints.

A comparison of Musk’s posts’ views versus those of other accounts, before and after an apparent July change. Screenshot: QUT study

While both 2016 and 2024 highlight social media’s influence on elections, the source of that influence has shifted from foreign interference to potentially internal biases within the platform. The idea that platform-controlled algorithms could selectively amplify specific narratives raises concerns about the role of social media in shaping political discourse.

2. Mechanisms of Amplification

- 2016: Russian operatives crafted fake news articles, sensationalized posts, and divisive content to spread disinformation and create chaos among U.S. voters. This was accomplished through coordinated inauthentic behavior—using bots, fake accounts, and trolls to boost engagement and make fabricated content appear credible. The goal was to prey on social and political divisions, with a focus on issues like immigration, race, and political distrust. In effect, the manipulation was rooted in creating and spreading false information to influence public opinion.

- 2024: In contrast, the potential bias in 2024 stems from algorithmic boosts that increased the visibility of certain accounts. The study on Platform X applied various statistical methods to track engagement metrics across prominent accounts and noted an unusual spike in engagement for Musk’s account and certain Republican-leaning accounts. Unlike in 2016, where content was manufactured to mislead, the 2024 case suggests existing content was selectively amplified based on algorithmic changes within the platform. This algorithmic bias could increase the perceived credibility or popularity of certain viewpoints, potentially influencing voter perception in subtle but significant ways.

In both 2016 and 2024, amplification worked by changing what users saw and engaged with. The 2016 approach was deliberately manipulative, whereas any algorithmic influence in 2024 is less direct and possibly unintended, though it could be just as consequential.
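To make the 2024 side of this comparison more concrete, here is a minimal sketch of a before-and-after engagement check around a change date. It is not the method used by Graham and Andrejevic, whose statistical approach is not detailed in this post. The function name `before_after_ratio` and the sample view counts are hypothetical and exist only for illustration.

```python
from datetime import date
from statistics import mean

# Change date taken from the post's narrative (July 13, 2024).
CHANGE_DATE = date(2024, 7, 13)


def before_after_ratio(daily_views, change_date=CHANGE_DATE):
    """Return (mean_before, mean_after, ratio) for a {date: views} mapping.

    Days strictly before change_date count as "before"; the change date
    itself and all later days count as "after".
    """
    before = [v for d, v in daily_views.items() if d < change_date]
    after = [v for d, v in daily_views.items() if d >= change_date]
    if not before or not after:
        raise ValueError("need at least one day on each side of the change date")
    mean_before = mean(before)
    mean_after = mean(after)
    if mean_before == 0:
        raise ValueError("mean views before the change date is zero")
    return mean_before, mean_after, mean_after / mean_before


# Made-up numbers for illustration only; these are NOT data from the study.
sample_views = {
    date(2024, 7, 10): 100,
    date(2024, 7, 11): 120,
    date(2024, 7, 12): 110,
    date(2024, 7, 13): 220,
    date(2024, 7, 14): 240,
    date(2024, 7, 15): 200,
}

mean_before, mean_after, ratio = before_after_ratio(sample_views)
print(f"before={mean_before:.1f} after={mean_after:.1f} ratio={ratio:.2f}")
```

For this made-up sample the ratio is 2.0, meaning average daily views doubled after the change date. A ratio like this only describes a shift. It cannot show by itself whether an algorithm change, a news event, or ordinary variation caused it, which is why comparisons against other accounts over the same period matter.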

3. Political Polarization and Public Discourse

- 2016: Russian disinformation campaigns were aimed at dividing Americans on contentious issues. By exploiting social media’s reach, these campaigns created echo chambers and fueled polarization. The spread of fabricated news stories and incendiary memes heightened social and political tensions, sowing distrust and shaping narratives around critical issues.

- 2024: The 2024 study suggests that algorithmic boosts on Platform X may have contributed to political bias by disproportionately favoring accounts with Republican leanings. For instance, Republican-aligned accounts saw higher view counts after July 13, which points to a possible recommendation bias that amplified certain narratives over others. This type of bias could affect public discourse by creating an unbalanced digital landscape in which certain voices appear more prominent or credible than others. Unlike in 2016, when disinformation polarized public opinion directly, the 2024 issue is about selective amplification that could indirectly sway perceptions by giving certain viewpoints a visibility advantage.

Both the 2016 and 2024 situations highlight how increased visibility of specific narratives, whether manipulated content or algorithmically boosted posts, can influence public discourse. The main difference lies in the origin and transparency of the influence: foreign actors exploited platform vulnerabilities in 2016, while platform-level decisions may be driving the engagement patterns observed in 2024.

4. Transparency and Accountability

- 2016: Social media platforms faced significant backlash after the 2016 election, with many questioning their ability to monitor and control foreign interference. Platforms like Facebook, Twitter, and Google were criticized for failing to detect and prevent the spread of Russian disinformation. In response, these companies increased transparency around political ads, enhanced detection of fake accounts, and introduced stricter content moderation policies. However, these efforts primarily focused on identifying external threats rather than scrutinizing internal algorithms.

- 2024: The study of Platform X in 2024 raises questions about the transparency of platform algorithms and how they might unintentionally or intentionally impact engagement. Unlike the clear-cut issue of fake news in 2016, algorithmic bias is more challenging to identify and quantify. The findings indicate that platform-controlled factors might amplify certain content, yet users lack insight into how these algorithms function. This lack of transparency could undermine trust in social media platforms and fuel concerns about the fairness of digital spaces during elections.

In both 2016 and 2024, the response to these issues underscores a need for accountability. While the 2016 election led to content regulation reforms, the 2024 findings point to the importance of algorithmic transparency to prevent internal biases from shaping political narratives.

5. Regulatory and Ethical Concerns

- 2016: The revelations about Russian interference in 2016 spurred calls for regulatory action, leading lawmakers to push for more oversight of social media platforms. The resulting reforms included transparency measures for political ads, improved detection of fake accounts, and increased collaboration with intelligence agencies to identify disinformation campaigns. These efforts represented a shift toward external threat management, aiming to prevent foreign manipulation of U.S. elections.

- 2024: In 2024, the regulatory focus has expanded to address internal factors like algorithmic accountability. The Graham and Andrejevic study on Platform X raises ethical questions about how platform algorithms may influence engagement metrics during elections. The findings have sparked calls for algorithmic transparency laws to prevent unintended biases from affecting political discourse. As social media becomes increasingly central to public life, ensuring that platform algorithms operate fairly and transparently is essential to maintaining trust in democratic processes.

Both elections underscore the need for robust regulation to address the evolving challenges of social media. While the 2016 election led to reforms focused on countering external threats, the 2024 findings highlight the importance of algorithmic accountability and the ethical considerations surrounding internal platform decisions.

Implications for the Future of Social Media and Democracy

The lessons from the 2016 and 2024 U.S. elections reveal that social media can be both a tool for free expression and a potential influence on democratic processes. The evolution from external disinformation campaigns to potential algorithmic biases highlights the need for platforms to operate with transparency and fairness, especially during sensitive times like elections.

As we move forward, several key considerations emerge:

- Algorithmic Transparency: Understanding how algorithms influence engagement is critical for ensuring fair digital spaces. Platforms should provide more transparency about how their algorithms function, especially if they impact public discourse during elections.

- Regulatory Oversight: Just as social media companies were held accountable for foreign interference after the 2016 election, there is a growing need for regulatory oversight of algorithmic practices. Laws that require transparency in algorithmic decisions and hold platforms accountable for unintended biases could help mitigate issues similar to those seen in 2024.

- Public Awareness and Media Literacy: Increasing public awareness of how social media algorithms work can empower users to critically evaluate content. By understanding that certain narratives may be amplified by algorithms, users can make more informed decisions about the information they consume.

- Ethical Standards for Platforms: Social media platforms should adopt ethical standards that prioritize balanced engagement, especially in politically sensitive contexts. This includes taking steps to prevent unintended biases and ensuring that algorithmic adjustments do not favor specific viewpoints.

- Ongoing Research: The findings from the 2024 study indicate that algorithmic bias is a complex issue that warrants further investigation. By continuing to study how social media algorithms influence engagement, researchers can provide valuable insights that inform regulatory efforts and platform policies.

Conclusion

The impact of social media on elections is hard to ignore, whether it comes through foreign interference or potential algorithmic biases within platforms. The 2016 U.S. election highlighted the dangers of disinformation, while the 2024 election underscores the possible influence of platform algorithms. Both cases reveal how social media platforms can shape public perception, intentionally or unintentionally, raising questions about the role of these platforms in democratic processes.

As we grapple with these challenges, it is crucial to advocate for transparency, accountability, and ethical standards that ensure fair and balanced engagement. Social media has the potential to foster informed public discourse, but it also carries the risk of bias and manipulation. By addressing these issues, we can work toward a future where social media serves as a trustworthy space for democratic expression.

Sources:

- Graham, Timothy & Andrejevic, Mark (2024). "A Computational Analysis of Potential Algorithmic Bias on Platform X During the 2024 U.S. Election". Queensland University of Technology Repository.

- "The Tactics & Tropes of the Internet Research Agency" (2018). The New York Times.

- "Russian Social Media Influence: Understanding Russian Propaganda in Eastern Europe". RAND Corporation Report. Provides an analysis of Russian disinformation strategies and social media influence operations.

- DiResta, Renée et al. (2018). "The Tactics & Tropes of the Internet Research Agency". New Knowledge Report. Analyzes how the Russian Internet Research Agency used social media to influence the 2016 U.S. election.

- "2019 Annual Report of the Global Engagement Center: Global Engagement Center’s Role in Countering Russian Influence Operations". U.S. Department of State Report. Discusses how the U.S. government is addressing disinformation and foreign interference.

- "Algorithmic Bias Detection and Mitigation: Best Practices and Policies to Reduce Consumer Harms" (2021). Brookings Institution Report. Provides insights into algorithmic bias and its societal impact, including in media and political contexts.