When Bad Bunny stepped onto the Super Bowl LX halftime stage, the outcome was never going to be neutral. The Super Bowl halftime show is not just a performance slot; it is one of the most scrutinized cultural platforms in the world, watched by well over a hundred million people and analyzed by advertisers, politicians, critics, and fans in real time.

What unfolded was not a crowd-pleasing medley designed to offend no one. Instead, Bad Bunny delivered a performance rooted firmly in his language, his culture, and his identity. Within minutes, the show sparked praise, backlash, political commentary, and global debate — turning a 13-minute set into a full-blown cultural moment.

A Halftime Show That Refused to Play It Safe

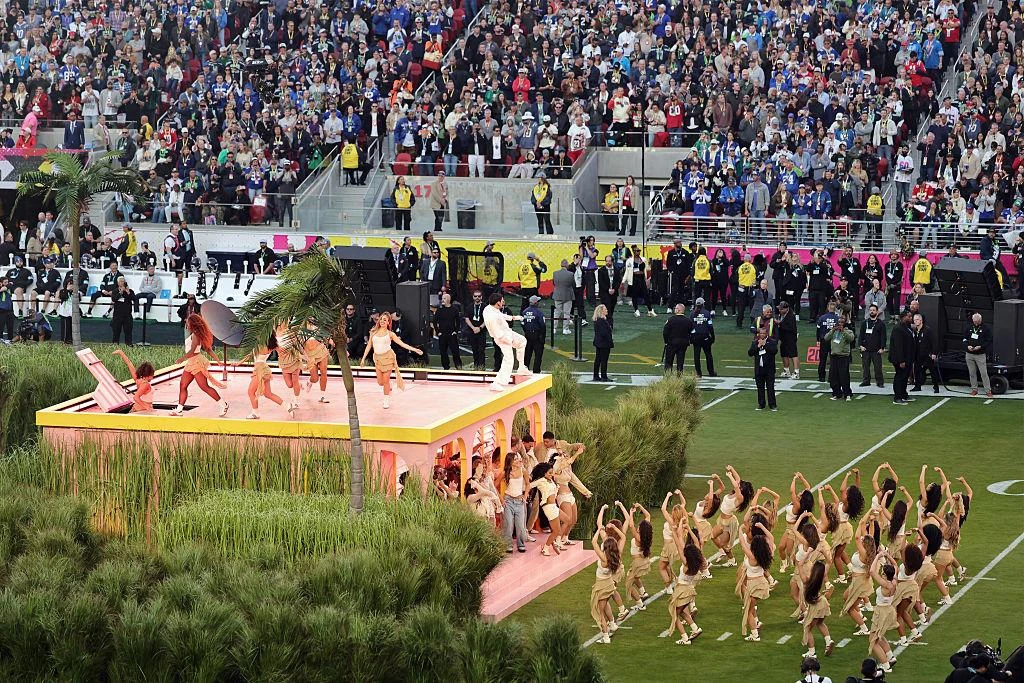

From the opening seconds, it was clear that Bad Bunny was not there to conform to traditional expectations of what a halftime show “should” look or sound like. The music was performed entirely in Spanish. The visuals leaned heavily into Puerto Rican and Latin American imagery. The choreography reflected street culture rather than stadium spectacle.

This approach marked a sharp departure from the typical halftime formula, which often prioritizes familiarity and broad appeal over specificity. Instead of chasing nostalgia or crossover hits, Bad Bunny chose authenticity, a decision that would ultimately shape both the praise and the criticism that followed.

Immediate Reaction: Applause, Backlash, and Headlines

The reaction was immediate and intense.

Supporters praised the performance as historic, calling it a long-overdue moment of representation for Spanish-speaking and Latino audiences. Social media platforms lit up with clips, commentary, and emotional responses from viewers who felt seen on a stage that has rarely centered them.

At the same time, critics labeled the show “divisive,” “political,” or even “un-American.” Some objected to the use of Spanish. Others expressed discomfort with cultural symbols they didn’t recognize or understand. The backlash itself quickly became part of the story, fueling even more coverage and discussion.

In news terms, this reaction pattern is a clear marker of success. Performances that fade quietly do not dominate headlines. This one did.

Measuring Success Beyond Ratings

While television ratings and streaming numbers confirmed strong viewership, the real measure of success lay elsewhere. The performance generated sustained media coverage well beyond game night. It dominated entertainment news, opinion columns, political commentary, and cultural analysis.

Search trends spiked for terms related to Bad Bunny, the halftime show, and Puerto Rican culture. Clips circulated globally, reaching audiences far outside the NFL’s traditional demographic. For a halftime show, this level of extended relevance is rare.

Success, in this case, wasn’t about universal approval. It was about impact.

The Core Message: Visibility Without Apology

The message of the performance was not delivered through speeches or slogans. It was embedded in the choices Bad Bunny made.

Performing entirely in Spanish was not a rejection of the audience — it was a statement that Spanish-speaking culture does not need translation or validation to belong on the biggest stage in American sports. The visuals reinforced this idea, drawing from everyday life, street aesthetics, and cultural symbols familiar to millions but rarely centered at events of this scale.

Rather than blending cultures into something neutral, the show insisted on specificity. That insistence was the message.

Puerto Rico at the Center of the Story

For Puerto Rico, the halftime show carried special weight. Bad Bunny has long positioned himself as an artist deeply connected to the island’s identity, struggles, and pride. By bringing that identity to the halftime stage, he elevated Puerto Rico from a background reference to the focal point of a global broadcast.

Reports of collective reactions across the island, from public watch parties to spontaneous applause, underscored how meaningful the moment was for many Puerto Ricans. It was seen not as a personal achievement but as a shared one.

Why Some Viewers Felt Uncomfortable

Much of the criticism aimed at the show centered on discomfort rather than concrete objections. For some viewers, hearing Spanish dominate a traditionally English-language broadcast felt unfamiliar. For others, the absence of overt gestures toward mainstream American pop culture created a sense of exclusion.

This reaction reveals more about audience expectations than about the performance itself. The Super Bowl halftime show has long been treated as a cultural mirror — and mirrors can unsettle when they reflect a version of America that challenges long-held assumptions.

Is Cultural Representation Political?

A recurring critique was that the performance felt “political.” Yet the show did not endorse policies, candidates, or parties. What it did was assert visibility.

In a polarized climate, visibility itself is often interpreted as a political act. When marginalized or underrepresented cultures appear unapologetically in mainstream spaces, that presence can be perceived as a challenge to existing norms.

Bad Bunny did not politicize the stage. The reaction politicized the visibility.

Unity Without Erasing Difference

Toward the end of the performance, visual cues emphasized unity across cultures and borders. Rather than framing unity as sameness, the show suggested a broader definition — one where differences coexist without being diluted.

This approach stood in contrast to the more sanitized messages of unity often seen at large-scale events. It suggested that inclusion does not require cultural compromise.

A Shift in What the Halftime Show Can Be

Historically, halftime shows have relied on familiar formulas: English-language hits, cross-generational appeal, and minimal cultural risk. Bad Bunny’s performance disrupted that formula.

By succeeding without conforming, the show expanded the definition of what a halftime performance can be. It demonstrated that cultural specificity can resonate at scale, and that authenticity can generate engagement equal to — or greater than — traditional crowd-pleasing tactics.

Long-Term Impact and Legacy

The true legacy of the performance will not be measured immediately. Its influence is likely to show up in future halftime selections, in production choices, and in organizers' willingness to feature artists who do not fit the traditional mold.

Already, the show is being referenced in discussions about representation, language, and the evolving definition of American culture. That alone places it among the most consequential halftime performances in recent history.

Final Assessment

Bad Bunny’s halftime show succeeded precisely because it refused to chase approval.

It commanded attention, sparked conversation, and challenged assumptions — all while remaining grounded in the artist’s identity. In a media landscape saturated with forgettable spectacle, the performance stood out by meaning something.

As a news event, it did exactly what impactful cultural moments do: it revealed fault lines, amplified voices, and left a lasting mark long after the lights went down.